Traditionally, companies embraced a DevOps mindset to monitor internal and external events and gain operability. They used tools with dashboards, alerting, a pre-defined set of metrics, and logs to detect a known set of failures. Recently, however, a new term, “observability,” has been gaining a lot of attention.

Dubbed “monitoring on steroids,” data observability is becoming the holy grail of data engineering for anyone building real-time capabilities that let teams explore the unknown unknowns and navigate more easily from effects to causes.

In fact, the premise of observability goes beyond traditional log monitoring and application troubleshooting. By letting teams ask open-ended questions of their systems, and by using artificial intelligence (AI) and machine learning (ML) to help those systems manage themselves, observability addresses an undisputed need in data engineering: better monitoring, or a more inclusive debugging and diagnostics method. Such a method must handle the volume, velocity, and variety of the data being collected and find solutions to the “unknown unknowns” in today’s increasingly distributed systems and modern infrastructures.

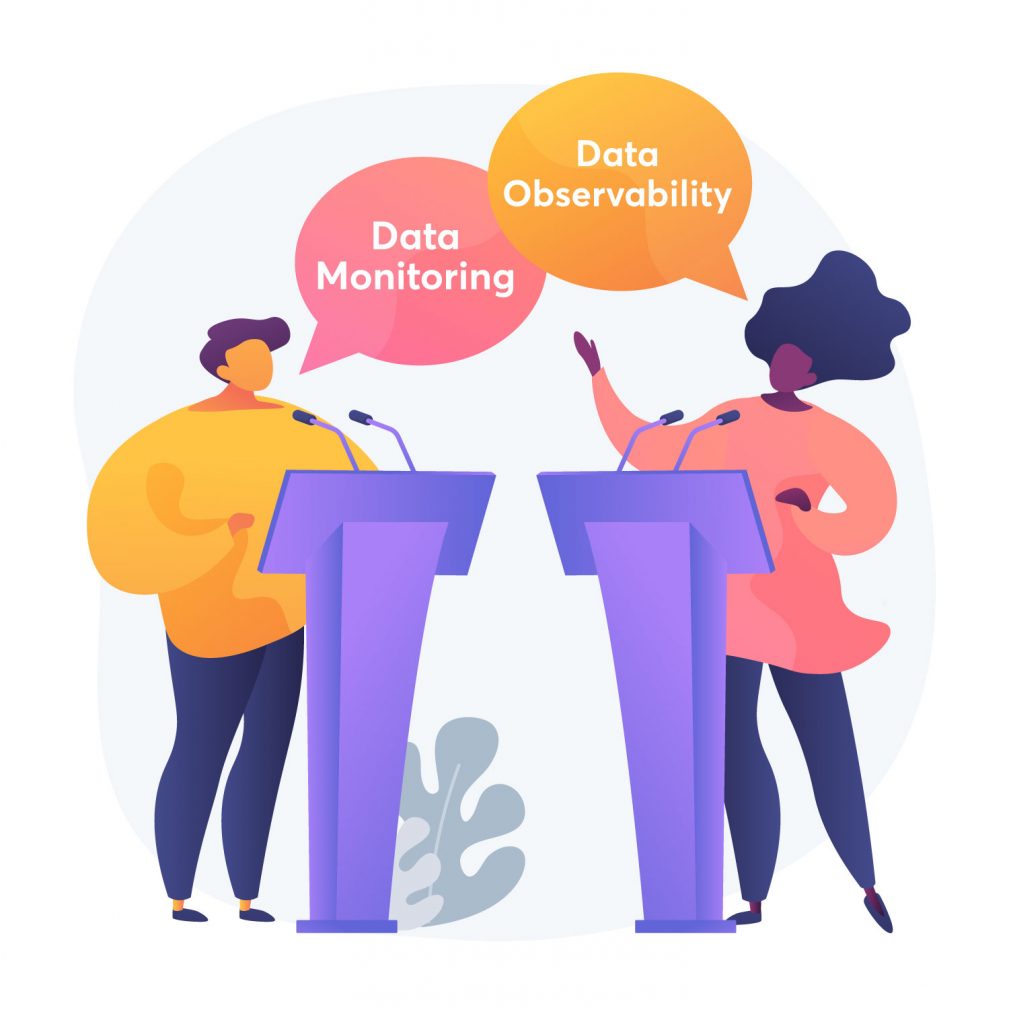

Monitoring and Observability are close cousins and complementary to each other.

Does this make data monitoring completely different from data observability? Nope! In reality, monitoring and observability are close cousins that complement each other, and together they answer the most pertinent question: What’s broken, and why?

While both concepts have been known for decades, their application in the software world, and especially in the data world, is new. It is a welcome step in the evolution of the stack and tools that we see in the data world.

Traditionally and typically:

- Monitoring tries to answer the what and when, while observability adds the context of how and why. So monitoring tells you whether the system works, and observability lets you ask why it’s not working.

- Monitoring works with a component view, and observability takes a system view.

- Monitoring uses pre-defined metrics, logs, and rules about a system (in other words, known unknowns), while observability helps us track the unknown unknowns.

- Monitoring is failure-centric, but observability understands the system regardless of an outage.
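One narrow way to picture the contrast between pre-defined rules and checks that adapt to what the data normally looks like is the minimal Python sketch below. It is an illustration only: the function names, the `min_rows` threshold, the z-score limit, and the sample row counts are all assumptions made for this example, and a z-score against recent history is just one simple stand-in for the broader idea of observability.

```python
from statistics import mean, stdev


def threshold_alert(row_count: int, min_rows: int = 1000) -> bool:
    """Monitoring-style check: a fixed, pre-defined rule (a known unknown)."""
    return row_count < min_rows


def zscore_alert(history: list[float], value: float, z_limit: float = 3.0) -> bool:
    """Baseline-driven check: flag values far from what recent history suggests."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # any change from a perfectly flat baseline is unusual
    return abs(value - mu) / sigma > z_limit


# Hypothetical daily row counts for one table, followed by today's load.
daily_row_counts = [10_050, 9_980, 10_120, 10_010, 9_940, 10_070, 10_030]
today = 6_500

fixed_rule_fired = threshold_alert(today)               # rule only fires below 1,000 rows
baseline_fired = zscore_alert(daily_row_counts, today)  # compares against the learned baseline

print(f"fixed rule fired: {fixed_rule_fired}, baseline check fired: {baseline_fired}")
```

In this made-up scenario the fixed rule stays silent because nobody anticipated a partial load, while the baseline check flags the drop. The fixed rule is still useful for the failures you already know about, which is why the two approaches complement each other.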

Naturally, a few questions arise here. Does this mean that monitoring is not enough when it comes to unknown unknowns or unpredicted failures? Is monitoring insufficient? Does it no longer matter?

The difference between data monitoring and data observability

The truth is that the difference between monitoring and observability lies more in the definition than in the application, where the two overlap significantly. Even so, observability is not a substitute for monitoring and does not eliminate the need for it.

Instead of debating the differences between data monitoring and data observability tools, we should focus on the need of the hour: building an ecosystem where monitoring and data observability components coexist to explain when and why something went wrong. This ecosystem is essential in an ever-changing world of data pipelines and ML model pipelines, whether they are deployed on DataOps or MLOps infrastructure.

In fact, it is a progression from having an accurate dashboard to automating decision science with AI/ML. At the end of this journey, both the data and business teams must be able to ask, and answer, the fundamental question: Is my data reliable?

As a leader in both monitoring and observing data and data quality for enterprises, Qualdo™ aims to solve both reliability and observability challenges by bringing together the following three principles:

- Being proactive and predictive rather than reactive, with drift detection and anomaly detection algorithms as an integral part.

- Having a unified view of both enterprise data and data from ML models.

- Building the context of ‘why’ by using ML, without having to create explicit rules. This helps to quickly improve the signal-to-noise ratio.

By applying these principles, Qualdo™ offers peace of mind to data engineering and data science teams by addressing two questions: What (symptom) is broken, and why (cause)? It lets you navigate easily from effects to causes.
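To make the idea of drift detection mentioned in the first principle more concrete, here is a minimal, generic Python sketch based on the Population Stability Index (PSI). It is not a description of how Qualdo works internally: the `psi` function, the bin count, the `eps` floor, and the sample data are all assumptions chosen for illustration. The 0.25 cut-off is a commonly cited rule of thumb for significant drift rather than a fixed standard.

```python
import math


def psi(reference: list[float], current: list[float],
        bins: int = 5, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference and a current sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate case: all reference values are identical

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical feature values: last month's data versus a shifted current batch.
reference = [float(x) for x in range(100)]
shifted = [x + 40 for x in reference]

no_drift_score = psi(reference, reference)
drift_score = psi(reference, shifted)

print(f"PSI, same data: {no_drift_score:.3f}; PSI, shifted data: {drift_score:.3f}")
```

A score near zero means the two distributions fill the bins in the same proportions, while a large score means the current batch looks different from the reference, which can be raised as a warning before any downstream failure occurs.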

To learn more about Qualdo, sign up here for a free trial.