Suppose your teams rely on data to drive every strategic decision. However, as your data flows grow more complex, you face an unsettling reality: undetected anomalies, delayed reports, and inconsistent data quality undermine your operations. This is where understanding what data observability is becomes critical.

Every time a data issue occurs, it ripples through the business, eroding trust and causing delays in key decisions. Data observability is not just about monitoring data pipelines; it is about ensuring real-time visibility into the health of your data. With observability, you can quickly spot anomalies, preempt performance issues, and ensure the integrity of your data across all systems, which makes it crucial for data reliability.

This blog will explore why data observability is essential for maintaining data reliability, its key components and challenges, and how the right tools can transform the way organizations handle their data.

The Importance of Data Observability

Maintaining data reliability is not just about ensuring data is available; it’s about ensuring it is complete, accurate, and timely. Whether you’re running real-time analytics, generating customer insights, or producing financial reports, any issues with your data can lead to poor decision-making and operational delays. One of the biggest challenges for data teams is detecting and addressing these issues in real time.

Data observability provides a proactive approach to monitoring, ensuring that your data pipelines are working as intended. By continuously monitoring data flow, data observability tools can detect anomalies, such as unexpected delays or incomplete data, and alert teams before these issues cause downstream problems. This keeps your data reliable, helping your teams make timely, informed decisions.

Some of the key benefits include:

- End-to-End Data Flow Monitoring: Visibility into each step of your data pipeline, from ingestion to analytics, helps ensure data integrity at every stage.

- Proactive Detection of Anomalies: Instead of responding to problems after they occur, data observability tools monitor real-time patterns to catch issues early on.

- Maintaining Performance and Data Quality: With real-time insights into data health, your teams can ensure that data meets the necessary quality standards, reinforcing the reliability of your business processes.

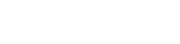

This proactive approach separates data observability from traditional monitoring methods, which typically focus on system performance and isolated metrics rather than the quality and flow of the data itself.
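To make the idea of proactive anomaly detection concrete, here is a minimal sketch that flags an unusual daily row count using a simple z-score. The function name, the threshold of three standard deviations, and the sample row counts are all assumptions made for illustration; real observability tools typically use more sophisticated models.

```python
from statistics import mean, stdev

def is_volume_anomaly(history, today, threshold=3.0):
    """Return True if today's row count is more than `threshold`
    standard deviations away from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly stable history: any change at all is unusual.
        return today != mu
    return abs(today - mu) / sigma > threshold

# Hypothetical daily row counts for a single table.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_930, 10_070, 10_010]

print(is_volume_anomaly(daily_row_counts, 10_090))  # a typical day
print(is_volume_anomaly(daily_row_counts, 4_300))   # a sharp drop in volume
```

A check like this looks at the data itself rather than at CPU or memory usage, which is the distinction from traditional monitoring drawn above.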

Key Components of Data Observability

Implementing observability requires more than just traditional monitoring tools. It involves core components designed to give data teams complete visibility into their data pipelines, allowing them to maintain data reliability and address issues quickly. These components include data lineage, monitoring metrics, and alerting systems.

1. Data Lineage

One of the most important aspects of data observability is data lineage, which tracks the entire lifecycle of your data, from source to destination. This provides a detailed view of where your data comes from, how it’s transformed, and where it ends up. When issues such as missing or corrupt data occur, data lineage makes it easier to identify the root cause, allowing teams to resolve the problem more quickly.

For instance, if a data feed fails, data lineage can show precisely where the failure occurred, helping teams address it before it affects downstream applications or reports.
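The root-cause idea can be sketched as a walk over a lineage graph. In the example below, the dataset names, the `LINEAGE` mapping, and both helper functions are hypothetical; production lineage is usually collected automatically by a tool rather than written by hand.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets it is built from.
LINEAGE = {
    "revenue_report": ["orders_clean", "customers_clean"],
    "orders_clean": ["orders_raw"],
    "customers_clean": ["crm_export"],
    "orders_raw": [],
    "crm_export": [],
}

def upstream_of(dataset, lineage):
    """Return every dataset upstream of `dataset`, nearest first (breadth-first)."""
    seen, order = set(), []
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        queue.extend(lineage.get(node, []))
    return order

def root_causes(dataset, lineage, failed):
    """Failed upstream datasets that have no failed upstream of their own."""
    candidates = [d for d in upstream_of(dataset, lineage) if d in failed]
    return [
        d for d in candidates
        if not any(u in failed for u in upstream_of(d, lineage))
    ]

# Both the raw feed and the table derived from it look broken;
# the walk points at the raw feed as the place to start.
print(root_causes("revenue_report", LINEAGE, failed={"orders_raw", "orders_clean"}))
```

Filtering out failures that have a failed ancestor is a design choice: it keeps the team focused on the earliest break rather than on its downstream symptoms.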

2. Monitoring Metrics

Monitoring metrics go beyond simple checks for uptime or system performance. Metrics like data completeness, freshness, accuracy, and volume can be tracked in real time to ensure data flows correctly and meets the required standards. By monitoring these metrics, data teams can proactively address issues before they become more significant problems, ensuring data reliability is maintained.

For example, if data arrives later than expected, observability tools can flag this delay and notify teams before it impacts reports or analytics.
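As a rough illustration of two of these metrics, the sketch below checks freshness (did the data arrive recently enough?) and completeness (what share of rows has every required field?). The function names, field names, sample rows, and the two-hour limit are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone

def freshness_ok(last_loaded_at, now, max_age):
    """True if the most recent load is no older than `max_age`."""
    return now - last_loaded_at <= max_age

def completeness(rows, required_fields):
    """Fraction of rows in which every required field is present and not None."""
    if not rows:
        return 0.0
    complete = sum(
        all(row.get(field) is not None for field in required_fields)
        for row in rows
    )
    return complete / len(rows)

# Hypothetical load metadata and sample rows.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded_at = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": "c@example.com"},
    {"id": 4},
]

# The last load was three hours ago, so a two-hour limit is exceeded.
print(freshness_ok(last_loaded_at, now, max_age=timedelta(hours=2)))
# Two of the four rows have both required fields.
print(completeness(rows, required_fields=["id", "email"]))
```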

3. Alerting Systems

An effective observability framework includes real-time alerting systems. These systems monitor for anomalies in your data and trigger alerts when something goes wrong. Unlike traditional alerts, which focus on hardware or infrastructure issues, observability alerts are specific to data quality. They notify teams of issues such as schema changes, missing data, or delayed updates, ensuring quick responses to maintain the health of your data.

Leveraging these components provides continuous insights into the performance of your data pipelines, empowering data teams to act quickly and maintain data quality standards.
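A schema-change alert, for example, could be produced by comparing the schema a pipeline expects with the one it actually observes. The sketch below is one simple way to do that; the `diff_schema` function, the column names, and the message wording are all hypothetical.

```python
def diff_schema(expected, observed):
    """Compare two {column: type} mappings and describe every difference."""
    alerts = []
    for col in sorted(expected.keys() - observed.keys()):
        alerts.append(f"missing column: {col}")
    for col in sorted(observed.keys() - expected.keys()):
        alerts.append(f"unexpected column: {col}")
    for col in sorted(expected.keys() & observed.keys()):
        if expected[col] != observed[col]:
            alerts.append(
                f"type changed: {col} {expected[col]} -> {observed[col]}"
            )
    return alerts

# Hypothetical schemas for a customer table.
expected = {"id": "int", "email": "str", "signup_date": "date"}
observed = {"id": "str", "email": "str", "plan": "str"}

for message in diff_schema(expected, observed):
    print("ALERT:", message)
```

In practice the messages would be routed to a notification channel rather than printed, but the comparison step is the part that makes the alert specific to the data rather than to the infrastructure.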

Challenges in Achieving Data Observability

While data observability offers significant advantages, implementing it is challenging. Many organizations struggle to achieve full observability due to factors such as data silos, complex architectures, and legacy systems.

1. Data Silos

One of the most common challenges is data silos. Data often exists in isolated systems across various departments or teams, each with its own tools and processes. This fragmentation makes it hard to achieve an integrated view of data across the organization, which limits the effectiveness of observability efforts. To address this, organizations must invest in tools and practices that unify data from different sources into a single, observable system.

2. Complex Architectures

Organizations’ data environments grow more complex as they scale, spanning multiple clouds, on-premises systems, and third-party services. Implementing observability across such diverse environments requires seamless integration of observability tools. Ensuring consistent monitoring across these different systems can be challenging, but it’s essential for maintaining data reliability.

3. Legacy Systems

Many organizations still rely on legacy systems not designed with modern observability in mind. These systems often lack the flexibility to integrate with newer observability tools, making it difficult to monitor data flow across the entire pipeline. In these cases, organizations may need to upgrade their infrastructure or develop custom solutions to achieve full data observability.

By addressing these challenges, organizations can unlock the full potential of data observability and ensure that their data pipelines remain reliable and efficient.

Best Practices for Data Observability

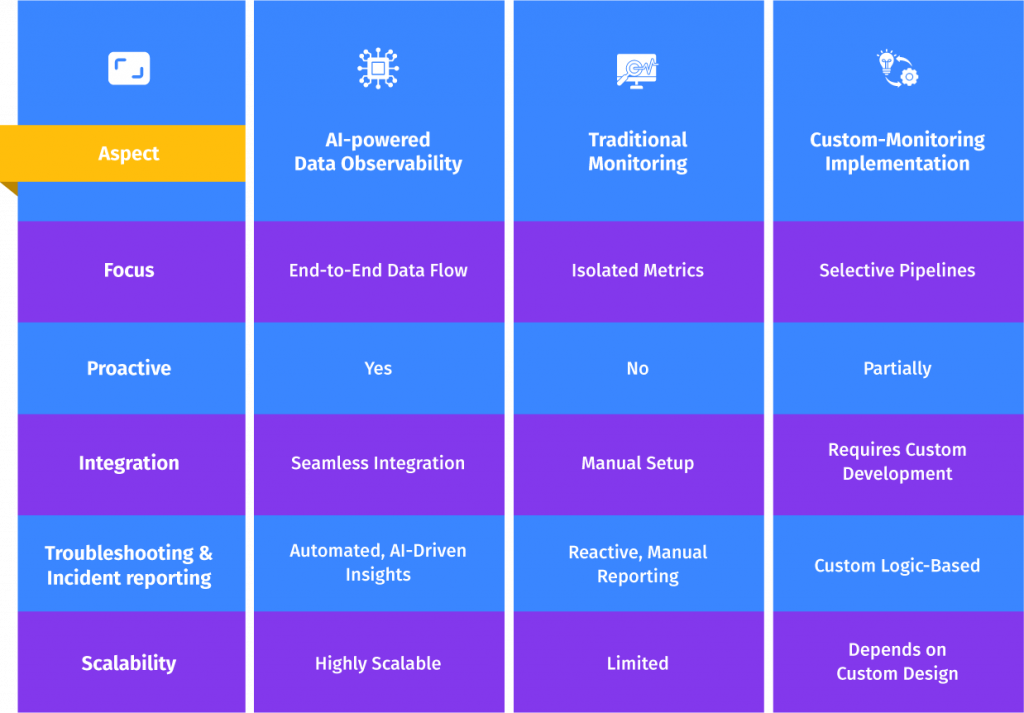

Successful implementation of data observability requires following best practices that align with your organization’s needs and infrastructure. Here are some key practices to consider:

1. Centralized Monitoring Platform

Centralizing your monitoring efforts is crucial for effective observability. Instead of using multiple tools to monitor different aspects of your data pipeline, a centralized platform brings all your data monitoring into one place. This simplifies management and gives you a comprehensive view of your data’s health, ensuring that issues are identified and addressed quickly.

2. Automate Alerts and Responses

Real-time alerts are critical, but automating responses to those alerts can further streamline operations. By integrating alert systems with automated workflows, your teams can resolve common data issues without manual intervention, reducing downtime and improving data reliability.
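One way to picture this is a small dispatcher that maps each alert type to an automated response and escalates anything it does not recognize. Everything in the sketch below, including the alert fields, handler names, and returned messages, is a hypothetical stand-in for whatever workflow engine your team actually uses.

```python
def retry_ingestion(alert):
    """Placeholder response: re-run the ingestion job for the dataset."""
    return f"re-ran ingestion for {alert['dataset']}"

def quarantine_batch(alert):
    """Placeholder response: hold the batch back from downstream consumers."""
    return f"quarantined latest batch of {alert['dataset']}"

# Hypothetical mapping from alert type to automated response.
HANDLERS = {
    "late_data": retry_ingestion,
    "schema_change": quarantine_batch,
}

def respond(alert, handlers=HANDLERS):
    """Run the automated response for an alert, or escalate to a human."""
    handler = handlers.get(alert["type"])
    if handler is None:
        return f"escalated to on-call: {alert['type']} on {alert['dataset']}"
    return handler(alert)

print(respond({"type": "late_data", "dataset": "orders_raw"}))
print(respond({"type": "duplicate_rows", "dataset": "orders_clean"}))
```

Keeping an explicit escalation path for unknown alert types means automation handles the common cases while unfamiliar problems still reach a person.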

3. Continuous Improvement

Your observability framework should evolve as your organization’s data needs grow. Review your observability tools regularly and make improvements as necessary. By refining your observability practices, you can ensure that your data pipelines remain reliable even as they scale.

Qualdo-DRX is designed to support these best practices, offering a comprehensive platform that integrates seamlessly with your existing systems and helps automate key observability processes. With Qualdo-DRX, organizations can achieve real-time data quality monitoring and maintain high standards of data reliability.

Conclusion

Ensuring data reliability is essential for organizations that rely on data-driven decision-making. Data observability provides the tools and insights necessary to maintain this reliability by offering end-to-end visibility into your data pipelines and detecting issues before they become business-critical problems.

With a powerful observability platform, you can empower your teams to monitor, maintain, and optimize data flow in real time. By implementing Qualdo-DRX, your organization can improve data health, reduce downtime, and ensure that reliable, high-quality data backs your business.